Hi HPC Team

I was hoping you might be able to help me find a solution to a problem with slow disk I/O from scratch during jobs.

A lot of our deep learning jobs in the group involve training sets too large to fit into memory. The solution is therefore to stream them from disk during training. We use scratch for this as the datasets are particularly large.

This is standard practice, and the functionality is built into many of the machine learning libraries we use, such as PyTorch.

A good example is the PyTorch `ImageFolder` dataset (`torchvision.datasets.ImageFolder`).

During training, some threads asynchronously load batches of images from the disk and provide them to the GPU when called.

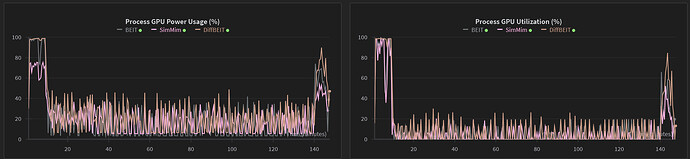
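The loading pattern described above can be illustrated with a small standard-library sketch. This is a simplified stand-in, not PyTorch's actual implementation: `prefetch_batches` and `load_batch` are hypothetical names, and the toy `load_batch` returns integers where a real one would read and decode images from scratch.

```python
from collections import deque
from concurrent.futures import ThreadPoolExecutor


def prefetch_batches(load_batch, num_batches, num_workers=4, prefetch=8):
    """Yield batches in order while worker threads load upcoming ones."""
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        pending = deque()
        next_index = 0
        # Fill the prefetch window before the consumer asks for anything.
        while next_index < num_batches and len(pending) < prefetch:
            pending.append(pool.submit(load_batch, next_index))
            next_index += 1
        while pending:
            # Blocks only if the oldest batch has not finished loading yet.
            batch = pending.popleft().result()
            # Keep the window full by scheduling the next read.
            if next_index < num_batches:
                pending.append(pool.submit(load_batch, next_index))
                next_index += 1
            yield batch


def load_batch(index):
    # Hypothetical stand-in for reading one batch of images from disk.
    return [index * 4 + i for i in range(4)]


batches = list(prefetch_batches(load_batch, num_batches=5))
```

When the disk keeps up, the consumer never waits on `.result()`. When reads are slower than the consumer, every call blocks, which is the situation in which a GPU would sit idle waiting for data.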

When I tried to use this method for training some of our models this morning (as the cluster came online), the throughput was very high and the GPU was fully utilized. However, around 10 minutes later, as others started using the cluster, the streaming became a significant bottleneck, and GPU utilization during the job is now sitting at around 5-10%. This plot shows the rapid decline in GPU usage.

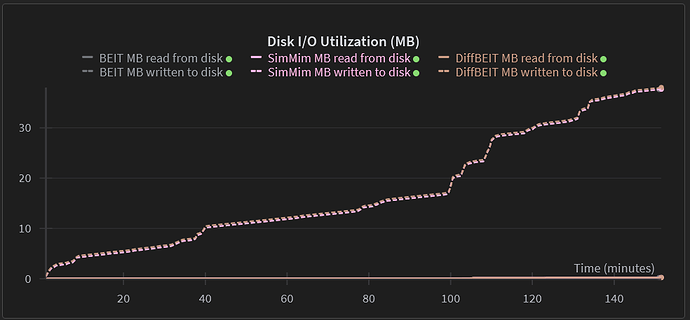

The inconsistent I/O usage can be seen here:

These jobs were launched with the following script:

    #!/bin/sh

    #SBATCH --cpus-per-task=16
    #SBATCH --mem=32GB
    #SBATCH --time=7-00:00:00
    #SBATCH --job-name=train_diffbeit
    #SBATCH --output=/home/users/l/leighm/DiffBEIT/logs//%A_%a.out
    #SBATCH --chdir=/home/users/l/leighm/DiffBEIT/scripts
    #SBATCH --partition=shared-gpu,private-dpnc-gpu
    #SBATCH --gres=gpu:ampere:1,VramPerGpu:20G
    #SBATCH -a 0-2

    network_name=( DiffBEIT BEIT SimMim )
    model_target_=( src.models.diffbeit.DiffBEIT src.models.diffbeit.ClassicBEIT src.models.diffbeit.RegressBEIT )

    export XDG_RUNTIME_DIR=""

    srun apptainer exec --nv -B /srv,/home \
        /home/users/l/leighm/scratch/Images/anomdiff-image_latest.sif \
        python train.py \
        network_name=${network_name[`expr ${SLURM_ARRAY_TASK_ID} / 1 % 3`]} \
        model._target_=${model_target_[`expr ${SLURM_ARRAY_TASK_ID} / 1 % 3`]} \

So right now I am just looking for ways to solve this. Is there an efficient way to move the data off scratch? Copying it directly to the compute node might be an option, but it would add a large overhead at the start of every job. Is there a way to make better use of the scratch I/O? Are some nodes faster at reading from scratch than others?
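The copy-to-node option mentioned above could be sketched roughly as follows. This is only an illustration under assumptions: `stage_dataset` and the `.staged` marker file are hypothetical, the demo uses throwaway temporary directories in place of scratch and node-local disk, and whether a node has suitable local storage depends on the cluster.

```python
import shutil
import tempfile
import time
from pathlib import Path


def stage_dataset(src, local_root):
    """Copy src under local_root once; return (local path, seconds spent copying)."""
    src = Path(src)
    dst = Path(local_root) / src.name
    marker = dst / ".staged"  # written only after a complete copy
    if marker.exists():
        return dst, 0.0  # already staged, nothing to copy
    start = time.perf_counter()
    shutil.copytree(src, dst, dirs_exist_ok=True)
    marker.touch()
    return dst, time.perf_counter() - start


# Demo: temporary directories stand in for scratch and node-local storage.
with tempfile.TemporaryDirectory() as tmp:
    scratch = Path(tmp) / "scratch" / "dataset"
    (scratch / "class_a").mkdir(parents=True)
    (scratch / "class_a" / "img0.bin").write_bytes(b"\x00" * 1024)
    local = Path(tmp) / "local"
    local.mkdir()

    staged, seconds = stage_dataset(scratch, local)
    copied_ok = (staged / "class_a" / "img0.bin").stat().st_size == 1024
    _, second_call_seconds = stage_dataset(scratch, local)
```

The returned copy time is the start-up overhead in question. It is paid once per staged copy, so how much it matters depends on the dataset size and on how long each job runs.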

Any help is greatly appreciated.

Matthew Leigh