As it seems we have an issue with some of the GPU nodes on Baobab since the latest maintenance, let’s discuss it here; it may be of interest to other users as well.

Some users have reported problems with some GPUs, but it seems the problem has since been solved for some of those users and some of those GPUs. It would be good to have an up-to-date list here of the users who still have issues.

Hello

As far as I know, the following nodes are not working:

- partition `shared-gpu`, node `gpu003`
- partition `shared-gpu-EL7`, node `gpu008`
- partition `kalousis-gpu-EL7`, node `gpu008`

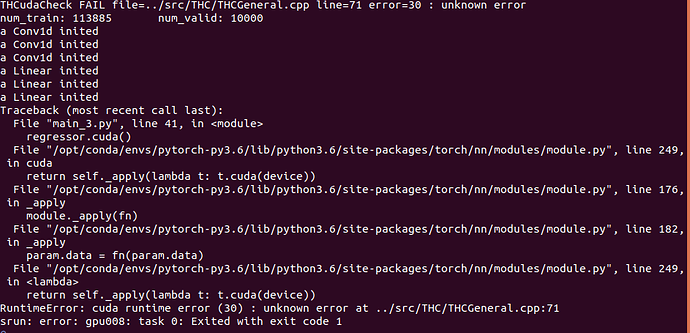

The error message:

Thank you
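A minimal sketch for checking each of the nodes listed above individually, assuming the test image `pytorch.simg` and script `pytorchTest.py` from the minimal example posted later in this thread. Slurm's `srun` accepts `--nodelist` to pin a job to a specific node. The helper `srun_command` is hypothetical; it only builds the argument lists and submits nothing.

```python
# Hypothetical helper: build srun command lines that pin the minimal
# PyTorch test to one specific node, so each reported node can be checked.
import shlex
from typing import List, Tuple

# Partition/node pairs reported as failing in this thread.
REPORTED: List[Tuple[str, str]] = [
    ("shared-gpu", "gpu003"),
    ("shared-gpu-EL7", "gpu008"),
    ("kalousis-gpu-EL7", "gpu008"),
]


def srun_command(partition: str, node: str,
                 image: str = "pytorch.simg",
                 script: str = "pytorchTest.py") -> List[str]:
    """Return the argv for one pinned test run (not executed here)."""
    return [
        "srun",
        "-p", partition,
        f"--nodelist={node}",    # run only on this node
        "--gres=gpu:1",          # request one GPU
        "singularity", "exec",
        "--nv",                  # expose the host NVIDIA driver in the container
        image,
        "python", script,
    ]


if __name__ == "__main__":
    for partition, node in REPORTED:
        # Print a copy-pasteable command; pass the list to subprocess.run to execute it.
        print(" ".join(shlex.quote(arg) for arg in srun_command(partition, node)))
```

Building the argument list rather than a single string keeps it usable with `subprocess.run` without shell quoting issues.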


Hi,

To help you find the problem, here is a minimal example.

- First, log in to login node 1 (I just discovered a problem with login node 2; I will cover it in another message).

- Build the official PyTorch image. For convenience, I uploaded the image to Docker Hub:

```shell
singularity build pytorch.simg docker://pablostrasser/pytorch:latest
```

- Create a basic Python script:

```python
import torch
cuda = torch.device('cuda')
a=torch.zeros(10,device=cuda)
print(a)
```

- Execute the script:

```shell
srun -p kalousis-gpu-EL7 --gres=gpu:1 singularity exec --nv pytorch.simg python /home/strassp6/scratch/pytorchTest.py
```

The script fails with:

```
THCudaCheck FAIL file=…/aten/src/THC/THCGeneral.cpp line=51 error=999 : unknown error
Traceback (most recent call last):
  File "/home/strassp6/scratch/pytorchTest.py", line 3, in <module>
    a=torch.zeros(10,device=cuda)
  File "/opt/conda/lib/python3.6/site-packages/torch/cuda/__init__.py", line 163, in _lazy_init
    torch._C._cuda_init()
RuntimeError: cuda runtime error (999) : unknown error at …/aten/src/THC/THCGeneral.cpp:51
srun: error: gpu008: task 0: Exited with exit code 1
```
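For triage, a slightly more talkative variant of the test script can record which PyTorch version and CUDA runtime the image contains, and catch a CUDA initialisation error instead of crashing. This is a hypothetical sketch (the name `cuda_report` is made up); it only uses `torch.version.cuda`, `torch.cuda.is_available()` and `torch.cuda.get_device_name()`, and it still runs, with a reduced report, where PyTorch is not installed.

```python
# Hypothetical diagnostic variant of pytorchTest.py: report what the
# container's PyTorch was built against and record CUDA errors
# instead of exiting with a traceback.
import platform


def cuda_report() -> dict:
    info = {"python": platform.python_version(), "torch": None,
            "built_cuda": None, "available": False, "error": None}
    try:
        import torch
    except ImportError as exc:
        info["error"] = "torch not importable: {}".format(exc)
        return info
    info["torch"] = torch.__version__
    # CUDA runtime version this PyTorch build ships with (None for CPU-only builds).
    info["built_cuda"] = torch.version.cuda
    info["available"] = bool(torch.cuda.is_available())
    if info["available"]:
        try:
            # Same allocation as the minimal example; a RuntimeError raised
            # during CUDA initialisation is recorded here.
            torch.zeros(10, device=torch.device("cuda"))
            info["device"] = torch.cuda.get_device_name(0)
        except RuntimeError as exc:
            info["error"] = str(exc)
    return info


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print("{}: {}".format(key, value))
```

Running it through the same `srun ... singularity exec --nv` command as above shows, per node, whether CUDA is visible at all and which runtime version the image expects.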

As a reminder, we had the same problem after the last update.

I hope this helps.

I’m available if you have more questions.

Pablo Strasser


Hello,

We suspect the issue is due to the following:

- You built the Singularity image on a machine that has CUDA installed on the OS, and that version isn’t compatible with the one available on Baobab. Can you let us know on which machine you did the build and which version of CUDA you had?
- login1 and login2 have CUDA installed via RPM. We will remove this installation to avoid conflicts with what is available on the nodes. You should always use CUDA through `module load CUDA` (optionally with a version) when building and running anything that uses CUDA.
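The diagnosis above revolves around version compatibility. The CUDA runtime shipped inside a container (for PyTorch, `torch.version.cuda`) needs a host NVIDIA driver at least as new as the minimum NVIDIA lists for that runtime. The sketch below compares the two. The values in `MIN_DRIVER` are the Linux x86_64 minimums from NVIDIA's CUDA Toolkit release notes to the best of our knowledge and should be double-checked there. The function names are hypothetical; `nvidia-smi --query-gpu=driver_version --format=csv,noheader` is a standard query.

```python
# Sketch: is the host NVIDIA driver new enough for a container's CUDA runtime?
# MIN_DRIVER values are assumed from NVIDIA's release notes (Linux x86_64);
# verify them before relying on this check.
import subprocess
from typing import Optional, Tuple

MIN_DRIVER = {
    "9.0": (384, 81),
    "10.0": (410, 48),
    "10.1": (418, 39),
}


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a version string such as '418.67' into (418, 67)."""
    return tuple(int(part) for part in text.strip().split("."))


def host_driver_version() -> Optional[str]:
    """Ask nvidia-smi for the driver version; None if it cannot be queried."""
    try:
        out = subprocess.run(
            ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
            stdout=subprocess.PIPE, universal_newlines=True, check=True,
        ).stdout
    except (OSError, subprocess.CalledProcessError):
        return None
    lines = out.splitlines()
    return lines[0].strip() if lines else None


def driver_supports(driver: str, cuda_runtime: str) -> bool:
    """True if `driver` meets the minimum listed for `cuda_runtime`."""
    return parse_version(driver) >= MIN_DRIVER[cuda_runtime]


if __name__ == "__main__":
    driver = host_driver_version()
    if driver is None:
        print("nvidia-smi not available on this machine")
    else:
        for runtime in MIN_DRIVER:
            print("driver {} supports CUDA {}: {}".format(
                driver, runtime, driver_supports(driver, runtime)))
```

Version tuples compare element by element, so a three-part driver version such as `450.51.06` is handled the same way as `418.67`.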


The CUDA version I had was 10.1, and I may have originally built the image on an OS machine.

I rebuilt the image on a Linux machine and pushed it to Docker Hub, and with this new image the nodes above now work fine. It is strange, though, because the old image was working fine on some of the nodes of the shared-gpu partition.

Thank you very much.

This seems to have solved the problem.

I also ran an image built before the update, and it now works as well.

I find it strange that the CUDA version on the login node has any effect, since it should never be used, and neither should the one on the node we launch the code on.

Normally, only the NVIDIA driver, together with some code provided by Singularity, should be used.

This is the main motivation for using Singularity: you only need to care about the Linux kernel version and the NVIDIA driver version.

I don’t have much experience with Singularity outside of Baobab; however, I can say that with nvidia-docker, the only problem I ever had was caused by using a container based on CUDA 9.0 (from before Baobab’s update) on a GPU that didn’t exist at that time (2080 Ti).
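The 2080 Ti case is most likely a compute-capability mismatch: the RTX 2080 Ti is a Turing GPU with compute capability 7.5, while CUDA 9.0 only targets architectures up to 7.0 (Volta), and native Turing support arrived with CUDA 10.0. A small sketch of that check follows, with a hand-written table (`MAX_CAPABILITY`, an assumption limited to these two versions) that ignores PTX JIT compilation, which can sometimes let older builds run on newer GPUs. On a live node, the capability tuple can be obtained with `torch.cuda.get_device_capability(0)`.

```python
# Sketch: can a given CUDA toolkit natively target a GPU's compute capability?
# Assumed table (check NVIDIA's documentation): CUDA 9.0 stops at Volta (7.0),
# CUDA 10.0 adds Turing (7.5). PTX JIT fallback is deliberately ignored.
from typing import Tuple

MAX_CAPABILITY = {
    "9.0": (7, 0),
    "10.0": (7, 5),
}

RTX_2080_TI = (7, 5)  # Turing


def toolkit_covers(cuda_version: str, capability: Tuple[int, int]) -> bool:
    """True if the toolkit's newest native target is at least `capability`."""
    return capability <= MAX_CAPABILITY[cuda_version]


if __name__ == "__main__":
    for version in MAX_CAPABILITY:
        print(version, toolkit_covers(version, RTX_2080_TI))
```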

In any case, the problem seems solved; thanks for that.

Pablo


Yes, my old image is also working now.